Memory addresses are an essential concept in computer systems, allowing the efficient storage and retrieval of data. A memory address is a unique identifier assigned to each byte or word in the computer’s memory. It serves as a location marker that enables the processor to locate, read, and write data in memory. Understanding memory addresses is crucial for programmers, computer scientists, and anyone working with computer systems. This article explains what memory addresses are, why they matter, and what role they play in computer operations.

At its core, a memory address is a numeric value that points to a specific location in the computer’s memory. Just like the street address of a house identifies its location, a memory address provides the processor with the precise location of a byte or word in the memory. This allows the processor to access data quickly and efficiently. Without memory addresses, the computer would struggle to store and retrieve information, rendering it nearly impossible to perform complex tasks.

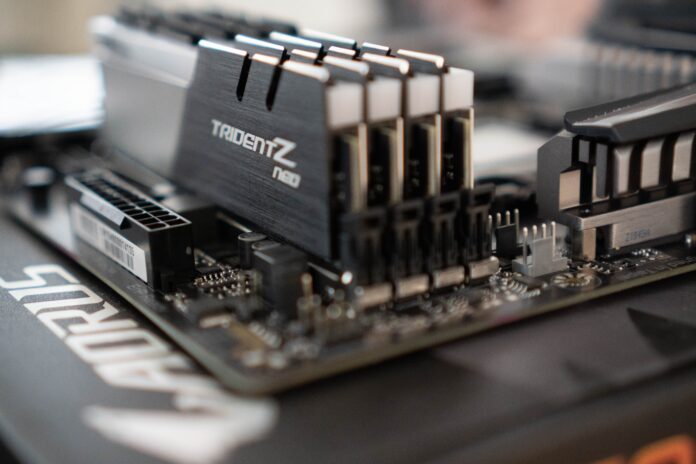

Memory addresses are typically written as hexadecimal numbers, using the digits 0 to 9 and the letters A to F. The width of an address is set by the processor architecture, and the width of the address bus limits how much physical memory can actually be reached. In a 32-bit architecture, addresses are usually 32 bits (4 bytes) wide, which allows 2^32 = 4,294,967,296 unique addresses. In a 64-bit architecture, addresses are stored in 64 bits (8 bytes), for a theoretical 2^64 = 18,446,744,073,709,551,616 addresses, although current processors typically implement fewer address bits (for example, 48-bit virtual addresses on many x86-64 systems).
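The arithmetic above can be checked with a short sketch. The function names `address_space_size` and `format_address` are made up for this illustration, and the sketch assumes one address per addressable unit.

```python
def address_space_size(address_bits: int) -> int:
    """Number of distinct addresses that fit in the given address width."""
    return 2 ** address_bits


def format_address(address: int, address_bits: int) -> str:
    """Render an address as zero-padded hexadecimal (4 bits per hex digit)."""
    hex_digits = address_bits // 4
    return f"0x{address:0{hex_digits}X}"


if __name__ == "__main__":
    print(address_space_size(32))    # 4294967296
    print(address_space_size(64))    # 18446744073709551616
    print(format_address(4096, 32))  # 0x00001000
```

Each hexadecimal digit encodes four bits, which is why a 32-bit address prints as eight digits.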

Now, let’s explore five important aspects related to memory addresses:

1. Addressable Memory Space: The width of an address defines the total range of memory locations a system can reference. With byte addressing, a 32-bit system can address at most 4 GiB (2^32 bytes, commonly written as 4 GB), while a 64-bit address can in principle cover 16 EiB (2^64 bytes, about 18.4 million terabytes). Real hardware and operating systems support far less than that theoretical limit, but the move to 64-bit architectures has still greatly expanded the memory capacity of modern computers, enabling more extensive data processing and storage.

2. Memory Allocation: Memory addresses are pivotal in memory allocation, which refers to the process of reserving and assigning memory space for specific data or programs. When a program is executed, it requires memory to store variables, instructions, and other data. Memory allocation can be static or dynamic. In static memory allocation, memory is assigned at compile-time and remains fixed throughout the program’s execution. Dynamic memory allocation, on the other hand, allows memory to be allocated and deallocated at runtime, providing flexibility but also requiring careful management to prevent memory leaks or excessive memory usage.

3. Pointers and Data Structures: Pointers, another fundamental concept in programming, rely heavily on memory addresses. A pointer is a variable that stores the memory address of another variable. It allows programmers to manipulate and access data indirectly by referring to the memory location where the data is stored. Pointers are particularly useful when working with complex data structures such as linked lists, trees, and graphs. By utilizing memory addresses, programmers can efficiently traverse and manipulate these data structures, optimizing memory usage and computational efficiency.

4. Memory Access and Performance: Efficient memory access is crucial for system performance. Main memory is much slower than the processor, so data moves between memory and the processor’s caches in fixed-size blocks called cache lines (commonly 64 bytes) rather than as individual bytes; pages, by contrast, are the larger units used by virtual memory. Programs that access neighboring addresses one after another exhibit spatial locality: once a cache line has been fetched, nearby accesses are served from the cache, which reduces memory access time. Understanding how addresses map to access patterns allows programmers to write code that keeps related data close together and avoids unnecessary memory transfers.

5. Memory Protection and Virtual Memory: Memory addresses play a vital role in memory protection and virtual memory systems. Memory protection ensures that programs cannot read or modify memory locations they are not authorized to access. By associating access permissions with ranges of addresses, the operating system prevents unauthorized access, enhancing system security and stability. Virtual memory systems translate the virtual addresses used by a program into physical addresses in RAM, typically one page at a time, which enables efficient memory management and multitasking. Virtual memory also lets programs address more memory than is physically installed by moving pages between RAM and secondary storage, such as hard drives.
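Item 3 above describes a pointer as a variable that holds the address of another variable. Python normally hides addresses, but the standard `ctypes` module exposes them, so the idea can be sketched as follows. The helper names `write_through_pointer` and `element_address` are invented for this example.

```python
import ctypes


def write_through_pointer(new_value: int) -> int:
    """Modify a C int indirectly, through a pointer to it."""
    value = ctypes.c_int(42)
    pointer = ctypes.pointer(value)      # pointer stores the address of value
    pointer.contents.value = new_value   # write to the location it points at
    return value.value                   # the original variable has changed


def element_address(array, index: int) -> int:
    """Address of array[index]: base address plus index times element size."""
    return ctypes.addressof(array) + index * ctypes.sizeof(array._type_)


if __name__ == "__main__":
    print(write_through_pointer(99))  # 99

    numbers = (ctypes.c_int32 * 4)(10, 20, 30, 40)
    third = element_address(numbers, 2)
    # Reading a c_int32 at the computed address yields the third element.
    print(hex(third), ctypes.c_int32.from_address(third).value)
```

The second helper shows why arrays and pointers work well together: elements sit at consecutive addresses, so an index is just an offset from the base address.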

Taken together, these five aspects show how memory addresses underpin data storage, retrieval, and manipulation. Programmers and computer scientists who understand them are better placed to optimize performance, manage memory effectively, and develop complex data structures and algorithms. The concepts discussed here also provide a foundation for delving deeper into memory management, computer architecture, and system-level programming.
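To make the performance point from item 4 more concrete, the sketch below computes the addresses touched when a two-dimensional array stored row by row is traversed in two different orders, then counts how often consecutive accesses land in a different block. It assumes 64-byte blocks and 8-byte elements, and counting block changes is only a rough proxy for cache behavior, not a cache simulation. All names are illustrative.

```python
CACHE_LINE_BYTES = 64  # assumption: a common block size, not a universal one


def traversal_addresses(rows, cols, element_size=8, base=0, by_rows=True):
    """Addresses visited when walking a row-major array by rows or by columns."""
    if by_rows:
        order = ((r, c) for r in range(rows) for c in range(cols))
    else:
        order = ((r, c) for c in range(cols) for r in range(rows))
    # Row-major layout: element (r, c) lives at base + (r * cols + c) * size.
    return [base + (r * cols + c) * element_size for r, c in order]


def count_line_changes(addresses, line_bytes=CACHE_LINE_BYTES):
    """Count how often consecutive accesses fall in a different block."""
    changes = 0
    previous_line = None
    for address in addresses:
        line = address // line_bytes
        if line != previous_line:
            changes += 1
            previous_line = line
    return changes


if __name__ == "__main__":
    print(count_line_changes(traversal_addresses(64, 64, by_rows=True)))   # 512
    print(count_line_changes(traversal_addresses(64, 64, by_rows=False)))  # 4096
```

Walking by rows visits eight neighboring elements per block before moving on, while walking by columns jumps 512 bytes on every step and so changes block on every access.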

Memory addresses are used not only by programmers but also by the operating system to manage system resources. On systems with virtual memory, the operating system gives each process its own virtual address space and maps it to separate regions of physical memory, so that processes cannot interfere with each other’s memory. This address translation is crucial for creating a secure and stable environment in which multiple programs run concurrently.
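A toy model can illustrate the translation step. The sketch below assumes 4 KiB pages and represents each process's page table as a plain dictionary from virtual page number to physical frame number. Real page tables are multi-level hardware structures, and the tables `process_a` and `process_b` are invented for the example.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


def split_virtual_address(virtual_address, page_size=PAGE_SIZE):
    """Split an address into (virtual page number, offset within the page)."""
    return divmod(virtual_address, page_size)


def translate(page_table, virtual_address, page_size=PAGE_SIZE):
    """Map a virtual address to a physical one using a dict-based page table."""
    page_number, offset = split_virtual_address(virtual_address, page_size)
    if page_number not in page_table:
        raise LookupError(f"page fault: virtual page {page_number} is not mapped")
    return page_table[page_number] * page_size + offset


# Hypothetical page tables: both processes use the same virtual pages,
# but the operating system has backed them with different physical frames.
process_a = {0: 7, 1: 3}
process_b = {0: 2, 1: 9}

if __name__ == "__main__":
    print(hex(translate(process_a, 0x1234)))  # 0x3234
    print(hex(translate(process_b, 0x1234)))  # 0x9234
```

The same virtual address `0x1234` resolves to different physical addresses in the two processes, which is how their memory stays separate even though both see the same range of addresses.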

Memory addresses also matter in inter-process communication. When multiple processes need to exchange data, they can use shared memory: the operating system maps the same region of physical memory into each process’s address space, possibly at a different virtual address in each. The processes then read from and write to that region, usually with some form of synchronization to avoid conflicting updates. Because no data has to be copied between processes, this mechanism is particularly useful when processes need to collaborate or exchange large amounts of data quickly.
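As a minimal sketch of this idea, Python's standard `multiprocessing.shared_memory` module (Python 3.8 and later) lets a process create a named block and lets others attach to it by name. For brevity the example below opens both handles in a single process, as a stand-in for two cooperating processes. The function name is invented for illustration.

```python
from multiprocessing import shared_memory


def exchange_through_shared_memory(message: bytes) -> bytes:
    """Write via one handle and read via another attached to the same block.

    The message must be non-empty, since a shared block needs a positive size.
    """
    writer = shared_memory.SharedMemory(create=True, size=len(message))
    try:
        # A second process would attach the same way, using the block's name.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            writer.buf[:len(message)] = message
            return bytes(reader.buf[:len(message)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # release the block once nobody needs it


if __name__ == "__main__":
    print(exchange_through_shared_memory(b"hello"))  # b'hello'
```

The processes agree on a name rather than a raw address, and the operating system decides where the block appears in each address space.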

Memory addresses also come into play in low-level programming, such as device drivers and operating system kernels. These software components often need to access hardware directly, and memory-mapped I/O is one common way to do so. In memory-mapped I/O, specific memory addresses are assigned to hardware registers, such as a device’s data, status, or control registers. By reading from and writing to these addresses, the software can communicate with the hardware and control its behavior. Memory addresses thus serve as a bridge between software and hardware.
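Real memory-mapped I/O needs hardware and privileged access, so the following is only a simulation: a `bytearray` stands in for the device's address range, and a `ctypes` structure overlays a hypothetical register layout on it. The register names, offsets, and the `CONTROL_ENABLE` bit are all invented for illustration.

```python
import ctypes


class DeviceRegisters(ctypes.LittleEndianStructure):
    """Hypothetical register layout; a real device defines its own."""
    _fields_ = [
        ("data", ctypes.c_uint32),     # offset 0x0
        ("status", ctypes.c_uint32),   # offset 0x4
        ("control", ctypes.c_uint32),  # offset 0x8
    ]


CONTROL_ENABLE = 0x1  # hypothetical "enable" bit in the control register


def make_simulated_device():
    """Return (backing bytes, register view) sharing the same memory."""
    backing = bytearray(ctypes.sizeof(DeviceRegisters))
    registers = DeviceRegisters.from_buffer(backing)
    return backing, registers


if __name__ == "__main__":
    backing, registers = make_simulated_device()
    registers.control |= CONTROL_ENABLE  # "software" writes a register
    print(backing[8])                    # 1: the byte at offset 0x8 changed
    backing[0] = 0x41                    # "hardware" updates the data register
    print(registers.data)                # 65
```

In a driver, the structure would be overlaid on the device's actual address range rather than on an ordinary buffer, but the principle is the same: each register is a fixed offset from a base address.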

It is worth noting that memory addresses are subject to certain limitations and considerations. For instance, many processors require or prefer that data be aligned, meaning that its address is a multiple of its size or of some architecture-specific boundary: a 4-byte integer, for example, is typically stored at an address divisible by 4. Misaligned accesses can be slower and, on some architectures, cause a fault. Additionally, memory addresses are affected by memory management techniques such as segmentation and paging. These techniques allow for efficient memory allocation and virtual memory management, but they introduce additional layers of address translation.
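The alignment rule reduces to simple arithmetic on addresses, sketched below. The helper names are illustrative, and `align_up` assumes the alignment is a power of two, which is the usual case in practice.

```python
def is_aligned(address: int, alignment: int) -> bool:
    """True if the address is a multiple of the alignment."""
    return address % alignment == 0


def align_up(address: int, alignment: int) -> int:
    """Round an address up to the next multiple of a power-of-two alignment."""
    if alignment <= 0 or alignment & (alignment - 1):
        raise ValueError("alignment must be a positive power of two")
    # Adding (alignment - 1) and clearing the low bits rounds upward.
    return (address + alignment - 1) & ~(alignment - 1)


if __name__ == "__main__":
    print(is_aligned(0x1004, 4))   # True
    print(is_aligned(0x1006, 4))   # False
    print(hex(align_up(0x1006, 8)))  # 0x1008
```

Allocators and compilers use this kind of rounding to place each object at an address its type can be accessed from efficiently.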

In summary, memory addresses are integral to computer systems, enabling efficient data storage, retrieval, and manipulation. They serve as unique identifiers that allow the processor to locate and access specific memory locations. Memory addresses have broad applications, ranging from memory allocation and data structure manipulation to system-level resource management and low-level hardware interaction. Understanding memory addresses is crucial for programmers and computer scientists, as it provides a foundation for optimizing performance, managing memory effectively, and developing complex software systems.