Latent Space: Unveiling the Dimensions of Hidden Representations

Latent Space is a central idea in artificial intelligence and machine learning: it is the conceptual landscape where hidden representations of data live. The concept spans many domains, from generative models in deep learning to the analysis of complex datasets. This exploration examines what Latent Space means, where it is applied, and the role it plays in contemporary artificial intelligence.

Defining Latent Space:

Latent Space refers to the space of hidden representations inside a mathematical model. In machine learning, particularly in generative models and autoencoders, Latent Space holds what the model has learned from its input data. Each input is mapped to a point whose coordinates capture its essential features or patterns, often in far fewer dimensions than the raw input. This condensed representation makes information efficient to store and manipulate, enabling tasks ranging from data generation to compression and synthesis.

Each data point is mapped to a set of latent variables that represent key features without explicit human-defined labels. Latent Space thus acts as an intermediate representation that condenses rich data into a more compact, abstract form. This abstraction aids efficient information processing and also makes it possible to explore the space and generate new data from it.
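The following minimal sketch illustrates this mapping. It uses principal component analysis (PCA), the simplest linear way to learn a Latent Space, in place of a neural encoder. The toy data, the function names `fit_pca_encoder` and `encode`, and the choice of two latent variables are illustrative assumptions. The data is generated from two hidden factors that are never shown to the encoder, yet the two learned latent variables recover them up to a linear transformation.

```python
import numpy as np

def fit_pca_encoder(X, k):
    """Learn a linear map from d-dimensional data to k latent variables (PCA)."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions, sorted by how much variance they explain.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]               # components has shape (k, d)

def encode(X, mean, components):
    """Map each data point to its k latent coordinates."""
    return (X - mean) @ components.T  # shape (n, k)

rng = np.random.default_rng(0)
# Illustrative data: 200 points in 10-D driven by 2 hidden factors plus small noise.
factors = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = factors @ mixing + 0.01 * rng.normal(size=(200, 10))

mean, components = fit_pca_encoder(X, k=2)
Z = encode(X, mean, components)

# No labels were used, yet a linear fit from Z predicts the hidden factors almost exactly.
centered = factors - factors.mean(axis=0)
coef, *_ = np.linalg.lstsq(Z, centered, rcond=None)
r2 = 1 - np.sum((Z @ coef - centered) ** 2) / np.sum(centered ** 2)
print(Z.shape, round(r2, 3))
```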

Applications of Latent Space:

Latent Space underpins many applications in machine learning and artificial intelligence. One notable application is generative modeling, where Latent Space is the source of new content. Generative models such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) create novel, realistic samples in two steps: they pick a point in Latent Space, then map it through a learned decoder or generator. Different latent points yield different outputs, each reflecting the patterns learned from the training data.
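The generation step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. The decoder is a hypothetical random linear map followed by `tanh`, standing in for a trained network, and the latent dimension of 4 is arbitrary. Sampling latent variables from a standard normal prior and decoding them is common to VAEs and many GANs. The `reparameterize` and `kl_to_standard_normal` helpers are specific to VAEs, where the encoder outputs a mean and log-variance for each input.

```python
import numpy as np

rng = np.random.default_rng(0)

def reparameterize(mu, log_var, rng):
    """VAE sampling: z = mu + sigma * eps, so z stays differentiable in mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)

# Hypothetical decoder standing in for a trained network: 4 latent dims -> 16 outputs.
W_dec = rng.normal(size=(4, 16))

def decode(z):
    return np.tanh(z @ W_dec)

# Generation: draw latent vectors from the prior N(0, I) and decode them.
z_prior = rng.standard_normal((5, 4))
samples = decode(z_prior)

# Encoder-side sampling for one input whose (hypothetical) encoder output is mu, log_var.
mu, log_var = np.array([0.5, -1.0, 0.0, 2.0]), np.full(4, -1.0)
z = reparameterize(mu, log_var, rng)
print(samples.shape, kl_to_standard_normal(mu, log_var))
```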

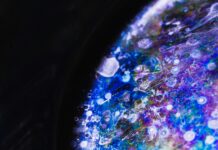

In image generation, for example, interpolating between two points in Latent Space produces a sequence of images that blends gradually from one to the other. Exploring and manipulating Latent Space in this way gives a degree of creative control over generated content, ranging from images to music and even textual narratives. Latent Space is therefore not just a computational construct but a canvas for the creative potential of artificial intelligence.
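Interpolation itself is simple arithmetic on latent vectors. The sketch below computes linear interpolation (`lerp`) and spherical interpolation (`slerp`), assuming a 64-dimensional Gaussian latent and a trained decoder that is not shown. Both function names are illustrative. Spherical interpolation is a common choice for Gaussian latents. When the two endpoints have equal length, every point on the slerp path keeps that length, whereas the midpoint of a straight line between two random Gaussian vectors tends to be shorter than typical samples.

```python
import numpy as np

def lerp(z0, z1, t):
    """Straight-line interpolation between two latent vectors."""
    return (1.0 - t) * z0 + t * z1

def slerp(z0, z1, t, eps=1e-8):
    """Spherical interpolation: move along the arc between the directions of z0 and z1."""
    cos_omega = np.dot(z0 / np.linalg.norm(z0), z1 / np.linalg.norm(z1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))  # angle between the two vectors
    if omega < eps:                                   # nearly parallel: fall back to lerp
        return lerp(z0, z1, t)
    s = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / s) * z0 + (np.sin(t * omega) / s) * z1

rng = np.random.default_rng(1)
z_a, z_b = rng.standard_normal(64), rng.standard_normal(64)

# Eight evenly spaced points from z_a to z_b; each row would be decoded into one frame.
path = np.stack([slerp(z_a, z_b, t) for t in np.linspace(0.0, 1.0, 8)])
print(np.round(np.linalg.norm(path, axis=1), 2))
```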

Beyond generative models, Latent Space finds applications in data compression and representation learning. Storing a short latent vector instead of the full input, and decoding it when needed, keeps the essential features at a fraction of the original size, at the cost of some reconstruction error. This is particularly valuable when resources are limited, or when extracting salient information from large datasets is paramount.
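To make the storage trade-off concrete, here is a minimal sketch that uses PCA as a stand-in for a learned encoder and decoder. The data sizes and the choice of 5 latent dimensions are assumptions for illustration. Each row is stored as 5 latent coordinates instead of 50 features, and one shared basis and mean are kept for the whole dataset. Decompression is lossy.

```python
import numpy as np

def compress(X, k):
    """Keep k latent coordinates per row, plus one shared basis and mean."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    basis = Vt[:k]                   # shape (k, d)
    codes = (X - mean) @ basis.T     # shape (n, k)
    return codes, basis, mean

def decompress(codes, basis, mean):
    """Map latent codes back to the original feature space (lossy)."""
    return codes @ basis + mean

rng = np.random.default_rng(2)
# Illustrative data: 1,000 rows of 50 features driven by 5 hidden factors plus noise.
X = rng.normal(size=(1000, 5)) @ rng.normal(size=(5, 50)) + 0.05 * rng.normal(size=(1000, 50))

codes, basis, mean = compress(X, k=5)
X_hat = decompress(codes, basis, mean)

stored = codes.size + basis.size + mean.size  # 5000 + 250 + 50 = 5300 numbers
original = X.size                             # 1000 * 50 = 50000 numbers
rel_error = np.linalg.norm(X - X_hat) / np.linalg.norm(X - mean)
print(f"stored {stored} of {original} numbers ({stored / original:.1%}), "
      f"relative reconstruction error {rel_error:.3f}")
```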

Navigating Latent Space:

Latent Space also invites exploration and navigation, and the ability to traverse it is one of the most useful properties of generative models. Moving through Latent Space step by step and decoding each point reveals gradual transformations in the generated data, exposing the patterns the model has encoded. Such traversals show where features change smoothly and where they change abruptly, which offers a unique lens on the latent representations the model has learned.
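A common way to navigate Latent Space is a latent traversal. You fix a starting point, sweep one coordinate across a range while holding the others constant, and decode each step. The sketch below assumes a hypothetical decoder (a random linear map followed by `tanh`) in place of a trained generator, and `traverse` is an illustrative helper name. The distance between consecutive decoded outputs is one rough signal of where the output changes smoothly and where it jumps.

```python
import numpy as np

def traverse(decode, z, dim, values):
    """Sweep latent coordinate `dim` over `values`, keep the others fixed, decode each point."""
    zs = np.repeat(z[None, :], len(values), axis=0)  # one copy of z per step
    zs[:, dim] = values
    return decode(zs)

rng = np.random.default_rng(3)
# Hypothetical decoder standing in for a trained generator: 3 latent dims -> 6 outputs.
W = rng.normal(size=(3, 6))

def decode(zs):
    return np.tanh(zs @ W)

z0 = rng.standard_normal(3)
frames = traverse(decode, z0, dim=1, values=np.linspace(-3.0, 3.0, 7))

# Size of the change between consecutive decoded outputs along the sweep.
step_sizes = np.linalg.norm(np.diff(frames, axis=0), axis=1)
print(frames.shape, np.round(step_sizes, 3))
```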

The concept of Latent Space navigation is not limited to generative models. In applications such as anomaly detection, understanding the layout of Latent Space becomes crucial. Anomalies deviate from normal patterns, so they often map to sparsely populated regions of Latent Space, far from where the codes of typical data cluster. Measuring how far a new input's latent code lies from those typical regions lets a system flag likely anomalies, which contributes to the robustness and interpretability of machine learning models.
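One simple way to turn this idea into a detector is sketched below. It models where the latent codes of normal training data fall with a single Gaussian, then scores new points by their Mahalanobis distance from it. This is one choice among several; reconstruction error is another common score. The sketch assumes the codes come from an already-trained encoder and are roughly Gaussian. Here they are synthetic, and the 99th-percentile threshold is an arbitrary illustrative choice.

```python
import numpy as np

def fit_latent_gaussian(Z_normal):
    """Summarize where normal data lives in Latent Space by a mean and inverse covariance."""
    mu = Z_normal.mean(axis=0)
    cov = np.cov(Z_normal, rowvar=False)
    return mu, np.linalg.inv(cov)

def anomaly_score(Z, mu, cov_inv):
    """Mahalanobis distance of each latent code from the normal-data distribution."""
    d = Z - mu
    return np.sqrt(np.einsum("ij,jk,ik->i", d, cov_inv, d))

rng = np.random.default_rng(4)
# Synthetic stand-in for encoder outputs on normal training data.
Z_train = rng.normal(size=(500, 8))
mu, cov_inv = fit_latent_gaussian(Z_train)
threshold = np.quantile(anomaly_score(Z_train, mu, cov_inv), 0.99)

# Three typical new codes followed by two far-off ones.
Z_new = np.vstack([rng.normal(size=(3, 8)), np.full((2, 8), 6.0)])
flags = anomaly_score(Z_new, mu, cov_inv) > threshold
print(flags)
```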

Latent Space in Unsupervised Learning:

Latent Space plays a fundamental role in unsupervised learning, where the model learns from unlabeled data. Autoencoders, a class of neural networks, are central to many unsupervised learning scenarios. An encoder maps the input into Latent Space, and a decoder maps it back to the original form. Training the network to reconstruct its own input is the learning mechanism: it forces Latent Space to capture the essential features needed for faithful reconstruction.
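The encode, decode, and reconstruct loop can be shown with the smallest possible autoencoder. It has a linear encoder and decoder, trained by plain gradient descent on mean squared reconstruction error, with the gradients written out by hand. This is a sketch under simplifying assumptions: no nonlinearities, synthetic data near a 2-D subspace, and a hand-picked learning rate and step count. Practical autoencoders use nonlinear layers and an automatic-differentiation framework.

```python
import numpy as np

rng = np.random.default_rng(5)
# Illustrative data: 256 centered samples in 8-D lying near a 2-D subspace.
X = rng.normal(size=(256, 2)) @ rng.normal(size=(2, 8)) + 0.01 * rng.normal(size=(256, 8))
X -= X.mean(axis=0)

d, k, lr = X.shape[1], 2, 0.05
W_enc = 0.1 * rng.normal(size=(d, k))  # encoder weights: data -> Latent Space
W_dec = 0.1 * rng.normal(size=(k, d))  # decoder weights: Latent Space -> data

def reconstruction_loss(X, W_enc, W_dec):
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

initial = reconstruction_loss(X, W_enc, W_dec)
for _ in range(3000):
    Z = X @ W_enc                    # encode
    X_hat = Z @ W_dec                # decode
    G = 2.0 * (X_hat - X) / X.size   # gradient of the mean squared error w.r.t. X_hat
    grad_dec = Z.T @ G
    grad_enc = X.T @ (G @ W_dec.T)   # uses W_dec from before this step's update
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc
final = reconstruction_loss(X, W_enc, W_dec)
print(f"reconstruction error: {initial:.4f} -> {final:.6f}")
```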

Unsupervised learning, facilitated by Latent Space representations, finds applications in various domains, from clustering similar data points to discovering hidden patterns in complex datasets. The unsupervised nature of learning in Latent Space allows models to extract meaningful representations without the need for explicit labels, making it a versatile tool in scenarios where labeled data is scarce or costly to obtain.
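For example, clustering in Latent Space can be as simple as running k-means on the encoded points. The sketch below implements Lloyd's algorithm with farthest-point initialization, a simple deterministic alternative to k-means++ that can be thrown off by outliers. The 2-D latent codes are synthetic stand-ins for an encoder's output.

```python
import numpy as np

def kmeans(Z, k, iters=100):
    """Lloyd's k-means on latent codes Z of shape (n, dim)."""
    # Farthest-point initialization: start from the first code, then repeatedly
    # add the code farthest from every center chosen so far.
    centers = [Z[0]]
    for _ in range(1, k):
        dists = np.linalg.norm(Z[:, None, :] - np.array(centers)[None, :, :], axis=2)
        centers.append(Z[dists.min(axis=1).argmax()])
    centers = np.array(centers)
    for _ in range(iters):
        # Assign each code to its nearest center.
        labels = np.linalg.norm(Z[:, None, :] - centers[None, :, :], axis=2).argmin(axis=1)
        # Move each center to the mean of its codes (keep it if its cluster is empty).
        new_centers = np.array([Z[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

rng = np.random.default_rng(6)
# Synthetic 2-D latent codes forming three well-separated groups of 100 points each.
Z = np.vstack([rng.normal(loc=c, scale=0.3, size=(100, 2)) for c in [(-4, 0), (0, 4), (4, 0)]])
labels, centers = kmeans(Z, k=3)
print(np.round(centers, 2))
```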

Latent Space for Transfer Learning:

Latent Space also plays a pivotal role in transfer learning, a paradigm where knowledge gained from one task is applied to improve performance on a different but related task. Latent Space representations act as transferable knowledge, capturing generic features that are useful across different domains. By building on the knowledge encoded in Latent Space, models can adapt and generalize to new tasks more effectively.

In transfer learning scenarios, pre-trained models encode knowledge in Latent Space during the initial training phase. This encoded knowledge can then be fine-tuned for specific tasks, allowing for efficient adaptation to new datasets or domains. The transferability of information encoded in Latent Space contributes to the versatility and efficiency of transfer learning approaches, making them valuable in real-world applications.
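A minimal sketch of the frozen-feature variant of this idea follows. An encoder is fitted once on plentiful unlabeled "source" data. It is then kept fixed while only a small logistic-regression head is trained on 40 labeled examples from a new task. Everything here is an illustrative assumption: PCA stands in for a pre-trained network, and the data is synthetic. Full fine-tuning would additionally update the encoder's weights.

```python
import numpy as np

rng = np.random.default_rng(7)
mixing = rng.normal(size=(3, 100))  # hypothetical: 3 hidden factors -> 100 observed features

def observe(factors):
    """Generate noisy 100-D observations from hidden factors (synthetic data)."""
    return factors @ mixing + 0.1 * rng.normal(size=(len(factors), 100))

def fit_encoder(X_unlabeled, k):
    """'Pre-training' stand-in: a whitened PCA encoder learned without labels."""
    mean = X_unlabeled.mean(axis=0)
    _, S, Vt = np.linalg.svd(X_unlabeled - mean, full_matrices=False)
    scale = S[:k] / np.sqrt(len(X_unlabeled))  # per-dimension std of the codes

    def encoder(X):
        return (X - mean) @ Vt[:k].T / scale
    return encoder

def train_head(Z, y, lr=0.5, steps=1000):
    """Logistic-regression head trained by gradient descent on frozen latent features."""
    w, b = np.zeros(Z.shape[1]), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(Z @ w + b)))
        w -= lr * Z.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

# Source task: 2,000 unlabeled observations; the encoder is never updated afterwards.
encoder = fit_encoder(observe(rng.normal(size=(2000, 3))), k=3)

# Target task: only 40 labels; the label depends on the first hidden factor.
F_tgt = rng.normal(size=(40, 3))
y_tgt = (F_tgt[:, 0] > 0).astype(float)
w, b = train_head(encoder(observe(F_tgt)), y_tgt)

# Evaluate on fresh target-task data.
F_test = rng.normal(size=(1000, 3))
accuracy = np.mean(((encoder(observe(F_test)) @ w + b) > 0) == (F_test[:, 0] > 0))
print(f"held-out accuracy: {accuracy:.2f}")
```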

Challenges and Considerations in Latent Space Exploration:

Working with Latent Space does come with challenges and considerations. One primary challenge is interpretability. Latent Space captures essential features effectively, but individual dimensions often lack an obvious meaning. Deciphering the semantics of latent variables remains an active area of research, especially in fields where model interpretability is crucial.

Another consideration is overfitting. A model with a large, unconstrained Latent Space can encode noise or idiosyncrasies of the training data instead of general structure, which leads to poor generalization on new data. Balancing the expressiveness of latent representations against this risk requires careful model design, such as limiting the latent dimensionality. It also calls for regularization techniques, such as weight decay or the KL penalty that VAEs place on their latent codes.

Future Directions and Emerging Trends:

Latent Space continues to be a focal point of research, with emerging trends pointing toward further advances. One notable trend is the exploration of disentangled Latent Space representations. Disentanglement aims to separate different factors of variation within Latent Space, allowing more explicit control over individual features. This can lead to more interpretable and controllable generative models, where specific attributes of generated content can be manipulated independently.
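One widely used approach to disentanglement is the beta-VAE objective, which scales the KL term of the VAE loss by a factor beta greater than 1. The sketch below computes that loss for a batch under stated assumptions. Squared error stands in for the reconstruction term, the encoder outputs a mean and log-variance per latent dimension, and beta=4 is only an example value. With beta=1 it reduces to a standard VAE-style loss. Larger values push the approximate posterior harder toward the factorized N(0, I) prior, and beta-VAE relies on that pressure to encourage each latent dimension to capture a separate factor.

```python
import numpy as np

def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    """Per-example loss: squared reconstruction error + beta * KL(q(z|x) || N(0, I))."""
    recon = np.sum((x - x_recon) ** 2, axis=-1)
    kl = 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)
    return recon + beta * kl

rng = np.random.default_rng(8)
x = rng.normal(size=(4, 10))
x_recon = x + 0.1 * rng.normal(size=(4, 10))                    # hypothetical decoder output
mu, log_var = rng.normal(size=(4, 3)), rng.normal(size=(4, 3))  # hypothetical encoder output
print(beta_vae_loss(x, x_recon, mu, log_var, beta=1.0).mean(),
      beta_vae_loss(x, x_recon, mu, log_var, beta=4.0).mean())
```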

The concept of Latent Space is also expanding into multimodal learning, where models process and generate information across multiple modalities, such as images and text. Multimodal Latent Space representations enable a more holistic understanding of complex data. For example, they can place an image and a caption that describes it near each other, so that either one can be used to retrieve the other.
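One common way to build such a shared space, popularized by CLIP-style models, has two parts. Each modality is projected into the same latent dimension, and training uses a symmetric contrastive (InfoNCE) loss so that matching image-text pairs score higher than mismatched ones. The sketch below computes only that loss and a nearest-neighbor retrieval step. The projection matrices and features are random placeholders for learned encoders, and the temperature of 0.07 is an illustrative value.

```python
import numpy as np

def normalize(v):
    """Scale each row to unit length so dot products become cosine similarities."""
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def contrastive_loss(img_emb, txt_emb, temperature=0.07):
    """Symmetric InfoNCE loss; row i of each array is assumed to describe the same item."""
    logits = normalize(img_emb) @ normalize(txt_emb).T / temperature
    idx = np.arange(len(logits))

    def cross_entropy(scores):
        scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
        log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
        return -log_probs[idx, idx].mean()  # the correct match sits on the diagonal

    # Average the image->text and text->image directions.
    return 0.5 * (cross_entropy(logits) + cross_entropy(logits.T))

rng = np.random.default_rng(9)
W_img = rng.normal(size=(512, 16))  # hypothetical image projection into a 16-D shared space
W_txt = rng.normal(size=(300, 16))  # hypothetical text projection into the same space
img_feats = rng.normal(size=(8, 512))
txt_feats = rng.normal(size=(8, 300))

img_z, txt_z = img_feats @ W_img, txt_feats @ W_txt
loss = contrastive_loss(img_z, txt_z)
# Cross-modal retrieval: for each image, the index of the most similar text.
best_text = (normalize(img_z) @ normalize(txt_z).T).argmax(axis=1)
print(loss, best_text)
```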

In conclusion, Latent Space stands as a cornerstone of modern machine learning and artificial intelligence. It captures hidden representations, fosters creativity, enables efficient information processing, and supports a wide range of applications, and together these roles underscore its transformative impact. As researchers continue to study the nuances of Latent Space, new possibilities open up, pushing the boundaries of what machines can learn, create, and understand. The journey through Latent Space remains a dynamic exploration, inviting scientists, engineers, and enthusiasts to navigate its dimensions and unlock its latent potential.