TinyML is a field that combines machine learning and embedded systems to deploy intelligent applications on resource-constrained devices. It brings machine learning models to microcontrollers, sensors, and other low-power hardware. The field has gained significant attention because it enables smart and autonomous functionality in devices that were once too limited computationally to support it.

TinyML, short for Tiny Machine Learning, focuses on bringing the benefits of machine learning to the edge of the network, where data is generated and actions need to be taken in real-time. By deploying machine learning models directly on small devices, TinyML enables these devices to make intelligent decisions and perform complex tasks without relying on cloud-based services or external infrastructure. This localized approach has numerous advantages, including reduced latency, enhanced privacy and security, and improved energy efficiency.

One of the key motivations behind TinyML is the increasing demand for intelligent devices that can operate in real-world environments with limited resources. Traditional machine learning approaches require significant computational power and memory, making it impractical to deploy them on small, resource-constrained devices. However, with advancements in hardware, algorithms, and model optimization techniques, TinyML has emerged as a solution to this problem. It allows devices to perform tasks such as image recognition, voice detection, predictive maintenance, and anomaly detection directly on the device, without relying on cloud computing or continuous internet connectivity.

The field of TinyML has seen remarkable progress in recent years, thanks to the convergence of several technological advancements. These include the development of efficient machine learning algorithms specifically designed for resource-constrained devices, the availability of low-power microcontrollers with embedded AI accelerators, and the optimization of machine learning models for deployment on these devices. This convergence has paved the way for a new era of intelligent devices that can operate independently and efficiently in real-world scenarios.

TinyML has wide-ranging applications across various industries and domains. In the healthcare sector, for example, TinyML enables the development of wearable devices that can monitor vital signs, detect anomalies, and provide timely alerts for potential health risks. In agriculture, TinyML can be used to create smart sensors that monitor soil conditions, weather patterns, and crop health, allowing farmers to optimize irrigation, fertilization, and pest control processes. In industrial settings, TinyML enables predictive maintenance, where devices can analyze sensor data in real-time to detect potential equipment failures before they occur, minimizing downtime and improving operational efficiency.
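The anomaly-detection idea behind the wearable and predictive-maintenance examples can be sketched in a few lines. This is a minimal illustration, not a production method: it assumes a single scalar sensor reading, uses a running z-score as the anomaly test, and the class name `RunningAnomalyDetector`, the threshold of 3.0, and the warm-up length are all hypothetical choices. It keeps only three numbers of state, which is the kind of memory budget a microcontroller can afford.

```python
import math


class RunningAnomalyDetector:
    """Flags readings far from the running mean, using constant memory."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold  # z-score cutoff (assumed value)
        self.warmup = warmup        # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def update(self, x):
        """Return True if x looks anomalous; otherwise fold x into the statistics."""
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                # Anomalous readings are not folded in, so a spike
                # does not inflate the baseline.
                return True
        # Welford's online update of mean and variance.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return False


if __name__ == "__main__":
    detector = RunningAnomalyDetector()
    readings = [20.0 + (0.1 if i % 2 else -0.1) for i in range(20)] + [35.0]
    for value in readings:
        if detector.update(value):
            print("anomaly:", value)
```

A real deployment would likely replace the z-score with a trained model, but the shape of the loop (read, score, update, act) stays the same.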

The development of TinyML applications involves several key considerations. Model optimization plays a crucial role in reducing both the size of machine learning models and their computational requirements. Techniques such as quantization, pruning, and compression are employed to reduce the memory footprint and improve inference speed without significantly sacrificing accuracy. Additionally, developers need to choose and implement machine learning algorithms that are well-suited to resource-constrained devices, striking a balance between performance and resource utilization.
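To make quantization concrete, here is a minimal sketch of affine 8-bit quantization applied to a list of weights. It assumes one scale and zero point for the whole list; the function names are hypothetical and do not come from any particular framework. Each weight is stored as an integer in the range 0 to 255 and recovered approximately on the way back.

```python
def quantize_uint8(weights):
    """Map float weights to integers in [0, 255] with one scale and zero point."""
    # The represented range is widened to include 0.0 so that zero
    # maps to an exact integer code.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 if all weights are 0
    zero_point = round(-w_min / scale)
    quantized = [
        max(0, min(255, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their integer codes."""
    return [(q - zero_point) * scale for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    q, scale, zp = quantize_uint8(weights)
    restored = dequantize(q, scale, zp)
    for original, approx in zip(weights, restored):
        print(f"{original:+.4f} -> {approx:+.4f}")
```

Storing one byte per weight instead of four cuts the weight storage to a quarter, at the cost of a reconstruction error on the order of one quantization step (`scale`).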

Another aspect of TinyML development is the collection and management of training data. Because small devices have limited resources, data collection must be performed efficiently, taking power constraints and storage limitations into account. Data augmentation techniques are often employed to expand the available training dataset and improve model generalization. Furthermore, developers need to consider the privacy and security implications of deploying machine learning models on edge devices, ensuring that sensitive data is protected.

The success of TinyML relies not only on technological advancements but also on a thriving ecosystem of tools, frameworks, and resources. Many organizations and communities have emerged to support the development and adoption of TinyML. These communities provide open-source libraries, development boards, and resources for model training and deployment. In addition, the availability of pre-trained models and transfer learning techniques makes it easier for developers to start building TinyML applications without extensive machine learning expertise.

In conclusion, TinyML represents a significant advancement in the field of machine learning, enabling the deployment of intelligent applications on resource-constrained devices. It brings the power of machine learning algorithms to the edge of the network, allowing devices to make intelligent decisions in real-time without relying on cloud services. With its potential to transform various industries, TinyML opens up new possibilities for the development of smart, autonomous devices that can operate independently and efficiently in real-world environments. As advancements in hardware, algorithms, and optimization techniques continue, we can expect to see an increasing adoption of TinyML and the proliferation of intelligent devices that enhance our daily lives.

Resource-constrained deployment:

TinyML enables the deployment of machine learning models on resource-constrained devices such as microcontrollers, sensors, and low-power devices. This allows intelligent applications to run directly on the edge, reducing the reliance on cloud-based services.

Real-time decision-making:

By deploying machine learning models on edge devices, TinyML enables real-time decision-making without the need for constant internet connectivity or communication with external servers. This results in faster response times and improved efficiency in applications that require quick actions.

Privacy and security:

With TinyML, sensitive data can be processed locally on the device, reducing the need to transmit it over the network. This enhances privacy and security, as data remains within the device’s control, mitigating the risks associated with transmitting data to external servers.

Energy efficiency:

TinyML focuses on optimizing machine learning models and algorithms for low-power devices. By reducing the computational and memory requirements of models, TinyML enables energy-efficient operation, extending the battery life of devices and minimizing power consumption.
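A rough duty-cycle calculation shows why shorter inference time translates into longer battery life. The sketch below assumes a device that wakes periodically, runs inference, and sleeps the rest of the time. Every number in the example (currents, timings, battery capacity) is hypothetical, and the model ignores battery self-discharge and regulator losses.

```python
def average_current_ma(active_ma, sleep_ma, active_s, period_s):
    """Time-weighted average current for a wake/sleep cycle."""
    duty = active_s / period_s
    return duty * active_ma + (1.0 - duty) * sleep_ma


def battery_life_hours(capacity_mah, avg_ma):
    """Idealized battery life: capacity divided by average draw."""
    return capacity_mah / avg_ma


if __name__ == "__main__":
    # Hypothetical device: 10 mA while inferring for 0.1 s every 10 s,
    # 0.01 mA while asleep, powered by a 200 mAh cell.
    avg = average_current_ma(active_ma=10.0, sleep_ma=0.01, active_s=0.1, period_s=10.0)
    hours = battery_life_hours(200.0, avg)
    print(f"average current: {avg:.4f} mA")
    print(f"estimated life: {hours / 24:.1f} days")
```

Under these assumed figures, halving the active time roughly halves the dominant term in the average current, which is why model optimization and energy efficiency are so closely linked.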

Versatile applications:

TinyML has a wide range of applications across industries such as healthcare, agriculture, industrial automation, and more. It enables the development of wearable devices, smart sensors, predictive maintenance systems, and other intelligent solutions that can operate autonomously and efficiently on edge devices.

TinyML, the intersection of machine learning and embedded systems, has attracted growing interest in the technology industry. The field focuses on deploying intelligent applications on resource-constrained devices. With TinyML, smart, autonomous devices can make intelligent decisions and perform complex tasks without relying on cloud-based services or external infrastructure.

One notable aspect of TinyML is its ability to bring machine learning to the edge of the network. Traditionally, machine learning models have required substantial computational power and memory, making it impractical to deploy them on small, resource-constrained devices. TinyML overcomes these limitations by optimizing models and algorithms to fit within the constraints of such devices. Because inference runs locally, with no network round trip, this approach reduces latency and improves efficiency in various applications.
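Whether a model "fits" is largely arithmetic: parameter count times bits per parameter, compared against the storage available. The sketch below illustrates that check. The parameter count and the 512 KiB flash budget are hypothetical, and the estimate covers weights only, not activations, code, or runtime overhead.

```python
def model_size_bytes(num_params, bits_per_param):
    """Bytes needed to store the weights, rounded up to a whole byte."""
    return (num_params * bits_per_param + 7) // 8


def fits_in_flash(num_params, bits_per_param, flash_budget_bytes):
    """True if the weights alone fit within the given storage budget."""
    return model_size_bytes(num_params, bits_per_param) <= flash_budget_bytes


if __name__ == "__main__":
    params = 250_000            # hypothetical model size
    budget = 512 * 1024         # hypothetical 512 KiB flash budget
    for bits in (32, 8):
        size = model_size_bytes(params, bits)
        print(f"{bits}-bit weights: {size} bytes, fits: {fits_in_flash(params, bits, budget)}")
```

With these assumed numbers, the 32-bit version needs 1,000,000 bytes and does not fit, while the 8-bit version needs 250,000 bytes and does.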

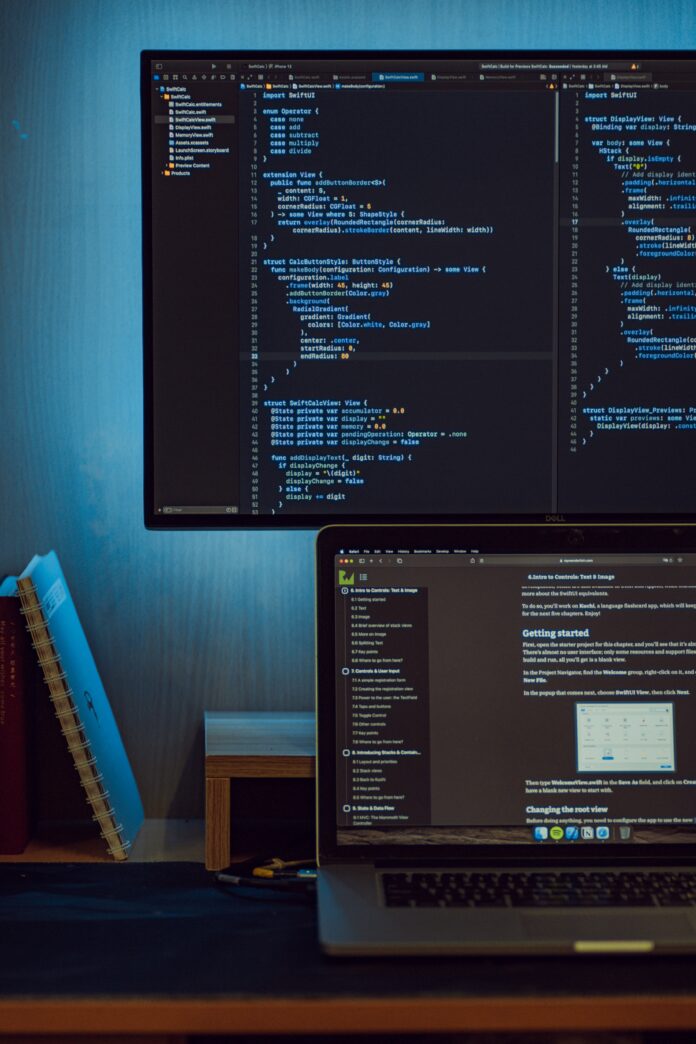

The development and adoption of TinyML have been driven by advancements in both hardware and software. On the hardware front, microcontrollers and systems-on-chip (SoCs) with embedded artificial intelligence (AI) accelerators have become increasingly common. These specialized hardware components are designed to perform machine learning computations efficiently, even in power-constrained environments, so TinyML models can run faster while consuming less energy.

In addition to hardware advancements, the availability of optimized software frameworks and libraries has played a crucial role in the widespread adoption of TinyML. These frameworks provide developers with the necessary tools to train, optimize, and deploy machine learning models on resource-constrained devices. They offer support for various types of models, such as deep neural networks, decision trees, and support vector machines, enabling a wide range of applications in fields like computer vision, natural language processing, and predictive analytics.

One of the key challenges in TinyML development is the optimization of models for deployment on small devices. Model optimization techniques aim to reduce the memory footprint and computational requirements of machine learning models without significantly sacrificing accuracy. Quantization reduces the precision of model weights, for example from 32-bit floating point to 8-bit integers, and pruning removes redundant connections. Both can significantly shrink models and improve their efficiency, so that they fit within the limited resources of tiny devices and run with minimal overhead.
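Pruning can be illustrated with magnitude pruning, one common heuristic in which the weights with the smallest absolute values are treated as the redundant connections and set to zero. The sketch below is an assumption about how pruning might be applied, not a description of any specific toolkit; the function name and the 50% sparsity target are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
    print(prune_by_magnitude(weights, sparsity=0.5))
    # → [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The zeros only save memory or compute if the storage format or the inference kernel exploits sparsity, and in practice a pruned model is usually fine-tuned afterwards to recover accuracy.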

The field of TinyML has also seen progress in the development of efficient training techniques for small-scale datasets. Traditional machine learning approaches often require large amounts of data to train accurate models. However, on resource-constrained devices, collecting and storing extensive datasets may not be feasible due to limited storage capacity and power constraints. To address this challenge, researchers have explored techniques like transfer learning and data augmentation. Transfer learning allows models trained on large datasets to be fine-tuned on smaller, domain-specific datasets, while data augmentation involves artificially expanding the training dataset by applying various transformations to existing data. These approaches help improve model generalization and enable effective training on limited data.
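Data augmentation for sensor data can be as simple as the sketch below, which expands a set of 1-D signal windows by applying a random circular time shift, a random gain, and additive Gaussian noise. The specific transformations and their parameter ranges are illustrative assumptions; suitable choices depend on the sensor and the task, and each augmented copy is assumed to keep the label of the window it came from.

```python
import random


def augment(signal, rng, noise_std=0.01, max_shift=2, gain_range=(0.9, 1.1)):
    """Return one randomly transformed copy of a 1-D sensor window."""
    shift = rng.randint(-max_shift, max_shift)
    gain = rng.uniform(*gain_range)
    n = len(signal)
    # Circular time shift, then amplitude scaling, then additive noise.
    return [
        signal[(i - shift) % n] * gain + rng.gauss(0.0, noise_std)
        for i in range(n)
    ]


def expand_dataset(windows, copies, seed=0):
    """Keep the original windows and append `copies` augmented versions of each."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    expanded = [list(w) for w in windows]
    for window in windows:
        for _ in range(copies):
            expanded.append(augment(window, rng))
    return expanded


if __name__ == "__main__":
    windows = [[0.0, 0.5, 1.0, 0.5], [1.0, 1.0, 0.0, 0.0]]
    dataset = expand_dataset(windows, copies=3)
    print(len(dataset), "windows after augmentation")
```

Augmentation of this kind normally runs on the training machine rather than on the device, so it adds no cost at inference time.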

Another important consideration in TinyML development is the integration of models with the overall system architecture. Since tiny devices often work in tandem with other hardware components, software modules, or connectivity interfaces, it is essential to ensure seamless integration and interoperability. This involves designing interfaces and APIs that enable smooth communication between the model and the surrounding system, as well as handling synchronization, data exchange, and errors. By integrating TinyML models effectively into the larger system, developers can create cohesive and efficient intelligent applications.
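One way to picture such an interface is a thin wrapper that sits between application code and the model, validates inputs, and turns failures into a safe fallback value instead of a crash. The sketch below is a hypothetical design: the class name `ModelInterface`, the fallback policy, and the stand-in model are all assumptions for illustration.

```python
class ModelInterface:
    """Hypothetical boundary between application code and an on-device model."""

    def __init__(self, predict_fn, input_size, fallback):
        self.predict_fn = predict_fn  # callable that maps features to a prediction
        self.input_size = input_size  # number of features the model expects
        self.fallback = fallback      # value returned when inference cannot run
        self.error_count = 0          # lets the rest of the system monitor health

    def run(self, features):
        """Return the model's prediction, or the fallback on bad input or failure."""
        if len(features) != self.input_size:
            self.error_count += 1
            return self.fallback
        try:
            return self.predict_fn(features)
        except (ValueError, ArithmeticError):
            self.error_count += 1
            return self.fallback


if __name__ == "__main__":
    # Stand-in "model" that fails when the first feature is zero.
    interface = ModelInterface(lambda x: 1.0 / x[0], input_size=2, fallback=None)
    print(interface.run([2.0, 1.0]))   # normal inference
    print(interface.run([1.0]))        # wrong input size, returns fallback
    print(interface.run([0.0, 1.0]))   # model error, returns fallback
    print("errors:", interface.error_count)
```

Keeping validation and error handling at this boundary means the surrounding system can treat the model as a component with a predictable contract.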

Beyond the technical aspects, the impact of TinyML extends to various industries and domains. In healthcare, TinyML enables the development of wearable devices that can continuously monitor vital signs, detect anomalies, and provide timely alerts for potential health risks. This has the potential to revolutionize healthcare by facilitating remote patient monitoring, improving early detection of medical conditions, and enhancing overall well-being. In agriculture, TinyML can be used to develop smart sensors that monitor soil conditions, weather patterns, and crop health, enabling farmers to optimize irrigation, fertilization, and pest control processes. This promotes sustainable agriculture practices, reduces waste, and increases crop yields. In industrial automation, TinyML enables predictive maintenance, where devices can analyze sensor data in real-time to detect potential equipment failures before they occur. This predictive capability helps minimize downtime, optimize maintenance schedules, and improve operational efficiency.

Furthermore, TinyML empowers developers and innovators to create novel applications and solutions that were once thought to be beyond the capabilities of resource-constrained devices. From smart home automation to environmental monitoring, from gesture recognition to personal assistant devices, the possibilities are vast. With TinyML, the boundaries of what small devices can achieve are continually being pushed, opening up new avenues for creativity and innovation.

In conclusion, TinyML represents a paradigm shift in the deployment of intelligent applications on resource-constrained devices. By bringing machine learning algorithms and models to the edge of the network, TinyML enables devices to make intelligent decisions and perform complex tasks independently and efficiently. The field of TinyML has seen significant advancements in hardware, software, and optimization techniques, paving the way for a new era of smart and autonomous devices. As the technology continues to evolve, we can expect TinyML to play an increasingly crucial role in various industries, transforming the way we interact with and benefit from intelligent technology.